Windows’ memory management—specifically its use of RAM and the pagefile—has been a subject of concern and confusion since NT 3.1 first shipped. To be sure, there is some reason for concern. We worry about RAM because we know if there isn’t enough, the system will slow down, and will page more to disk. And that’s why we worry about the page file.

(There is also reason for confusion. Memory management in any modern operating system is a complex subject. It has not been helped by Microsoft’s ever-changing choice of nomenclature in displays like Task Manager.)

Today, RAM is just unbelievably cheap by previous standards. And Task Manager’s displays have gotten a lot better. That “memory” graph really does show RAM usage now (in Vista and 7 they made it even more clear: “Physical Memory Usage”), and people are commonly seeing their systems with apparently plenty of what Windows calls “available” RAM. (More on that in a later article.) So users and admins, always in pursuit of the next performance boost, are wondering (not for the first time) if they can delete that pesky old page file. After all, keeping everything in RAM just has to be faster than paging to disk, right? So getting rid of the page file should speed things up! Right?

You don’t get any points for guessing that I’m going to say “No, that’s not right.”

You see, eliminating the page file won’t eliminate paging to disk. It likely won’t even reduce the amount of paging to disk. That is because the page file is not the only file involved in virtual memory! Not by far.

Types of virtual memory

There are three categories of “things” (code and data) in virtual memory. Windows tries to keep as much of all of them in RAM as it can.

Nonpageable virtual memory

The operating system defines a number of uses of virtual memory that are nonpageable. As noted above, this is not stuff that Windows “tries to keep in RAM”—Windows has no choice; all of it must be in RAM at all times. These have names like “nonpaged pool,” “PFN database,” “OS and driver code that runs at IRQL 2 or above,” and other kernel mode data and code that has to be accessed without incurring page faults. It is also possible for suitably privileged applications to create some nonpageable memory, in the form of AWE allocations. (We’ll have another blog post explaining AWE.) On most systems, there is not much nonpageable memory.

(“Not much” is relative. The nonpageable memory alone on most Windows systems today is larger than the total RAM size in the Windows 2000 era!)

You may be wondering why it’s called “virtual memory” if it can’t ever be paged out. The answer is that virtual memory isn’t solely about paging between disk and RAM. “Virtual memory” includes a number of other mechanisms, all of which do apply here. The most important of these is probably address translation: The physical—RAM—addresses of things in nonpageable virtual memory are not the same as their virtual addresses. Other aspects of “virtual memory” like page-level access protection, per-process address spaces vs. the system-wide kernel mode space, etc., all do apply here. So this stuff is still part of “virtual memory,” and it lives in “virtual address space,” even though it’s always kept in RAM.

Pageable virtual memory

The other two categories are pageable, meaning that if there isn’t enough RAM for everything to stay in RAM all at once, parts of the memory in these categories (generally, the parts that were referenced longest ago) can be kept or left out on disk. When it’s accessed, the OS will automatically bring it into RAM, possibly pushing something else out to disk to make room. That’s the essence of paging. It’s called “paging,” by the way, because it’s done in terms of memory “pages,” which are normally just 4K bytes… although most paging I/O operations move many pages at once.

Collectively, the places where virtual memory contents are kept when they’re not in RAM are called “backing store.” The second and third categories of virtual memory are distinguished from each other by two things: how the virtual address space is requested by the program, and where the backing store is.

Committed memory

One of these categories is called “committed” memory in Windows. Or “private bytes,” or “committed bytes,” or “private commit,” depending on where you look. (On the Windows XP Task Manager’s Performance tab it was called “PF usage,” short for “page file usage,” possibly the most misleading nomenclature in any Windows display of all time.) In Windows 8 and Windows 10’s Task Manager “details” tab it’s called “Commit size.”

Whatever it’s called, this is virtual memory that a) is private to each process, and b) for which the pagefile is the backing store. This is the pagefile’s function: it’s where the system keeps the part of committed memory that can’t all be kept in RAM.

Applications can create this sort of memory by calling VirtualAlloc, or malloc(), or new(), or HeapAlloc, or any of a number of similar APIs. It’s also the sort of virtual memory that’s used for each thread’s user mode stack.

By the way, the sum of all committed memory in all processes, together with operating-system defined data that is also backed by the pagefile (the largest such allocation is the paged pool), is called the “commit charge.” (Except in PerfMon, where it’s called “Committed Bytes” under the “Memory” object.) On the Windows XP Task Manager display, that “PF usage” graph was showing the commit charge, not the pagefile usage.

A good way to think of the commit charge is that if everything that was in RAM that’s backed by the pagefile had to be written to the pagefile, that’s how much pagefile space it would need.

So you could think of it as the worst case pagefile usage. But that almost never happens; large portions of the committed memory are usually in RAM, so commit charge is almost never the actual amount of pagefile usage at any given moment.

Mapped memory

The other category of pageable virtual memory is called “mapped” memory. When a process (an application, or anything else that runs as a process) creates a region of this type, it specifies to the OS a file that becomes the region’s backing store. In fact, one of the ways a program creates this stuff is an API called MapViewOfFile. The name is apt: the file contents (or a subset) are mapped, byte for byte, into a range of the process’s virtual address space.
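As a rough illustration of the “mapped, byte for byte” idea, here is a minimal Python sketch. It uses Python’s standard `mmap` module rather than calling MapViewOfFile directly (on Windows, CPython builds `mmap` on top of the Win32 file-mapping calls). The scratch file, its contents, and the helper name `map_and_modify` are made up for the example.

```python
import mmap
import os
import tempfile


def map_and_modify(payload: bytes) -> bytes:
    """Map a scratch file, change one byte through the mapping,
    and return what the file contains afterwards."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "r+b") as f:
            f.write(payload)
            f.flush()
            # Length 0 maps the whole file into this process's address space.
            with mmap.mmap(f.fileno(), 0) as view:
                view[0:1] = b"J"   # a plain memory write dirties the mapped page
                view.flush()       # the mapped file itself is the backing store
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)


result = map_and_modify(b"hello, mapped file")
```

No explicit write call is made on the file after it is mapped; the change reaches the file because the file is where the modified page gets written back. The pagefile plays no part in this.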

Another way to create mapped memory is to simply run a program. When you run an executable file the file is not “read,” beginning to end, into RAM. Rather it is simply mapped into the process’s virtual address space. The same is done for DLLs. (If you’re a programmer and have ever called LoadLibrary, this does not “load” the DLL in the usual sense of that word; again, the DLL is simply mapped.) The file then becomes the backing store—in effect, the page file—for the area of address space to which it is mapped. If all of the contents of all of the mapped files on the system can’t be kept in RAM at the same time, the remainder will be in the respective mapped files.

This “memory mapping” of files is done for data file access too, typically for larger files. And it’s done automatically by the Windows file cache, which is typically used for smaller files. Suffice it to say that there’s a lot of file mapping going on.

With a few exceptions (like modified pages of copy-on-write memory sections) the page file is not involved in mapped files, only for private committed virtual memory. When executing code tries to access part of a mapped file that’s currently paged out, the memory manager simply pages in the code or data from the mapped file. If it ever is pushed out of memory, it can be written back to the mapped file it came from. If it hasn’t been written to, which is usually the case for code, it isn’t written back to the file. Either way, if it’s ever needed again it can be read back in from the same file.

A typical Windows system might have hundreds of such mapped files active at any given time, all of them being the backing stores for the areas of virtual address space they’re mapped to. You can get a look at them with the SysInternals Process Explorer tool by selecting a process in the upper pane, then switching the lower pane view to show DLLs.

So…

Now we can see why eliminating the page file does not eliminate paging to and from disk. It only eliminates paging to and from the pagefile. In other words, it only eliminates paging to and from disk for private committed memory. All those mapped files? All the virtual memory they’re mapped into? The system is still paging from and to them…if it needs to. (If you have plenty of RAM, it won’t need to.)

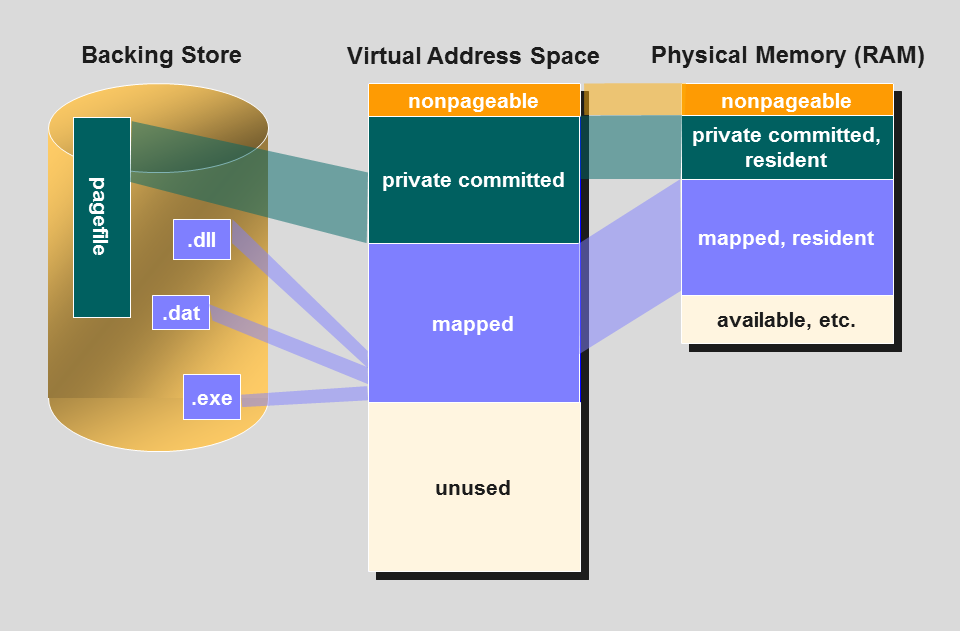

The diagram above shows, in greatly oversimplified and not-necessarily-to-scale fashion, the relationship between virtual address space, RAM, and the various backing stores. All of nonpageable virtual space is, of course, in RAM. Some portion of the private committed address space is in RAM (“resident”); the remainder is in the pagefile. Some portion of the mapped address space is also in RAM; the remainder is in all the files to which that address space is mapped. The three mapped files (one .dat, one .dll, one .exe) are, of course, representative of the hundreds of mapped files in a typical Windows system.

A matter of balance

So that’s why removing the pagefile doesn’t eliminate paging. (Nor does it turn off or otherwise get rid of virtual memory.) But removing the pagefile can actually make things worse. Reason: you are forcing the system to keep all private committed address space in RAM. And, sorry, but that’s a stupid way to use RAM.

One of the justifications, the reason for existence, of virtual memory is the “90-10” rule (or the 80-20 rule, or whatever): programs (and your system as a whole) spend most of their time accessing only a small part of the code and data they define. A lot of processes start up, initialize themselves, and then basically sit idle for quite a while until something interesting happens. Virtual memory allows the RAM they’re sitting on to be reclaimed for other purposes until they wake up and need it back (provided the system is short on RAM; if not, there’s no point).

But running without a pagefile means the system can’t do this for committed memory. If you don’t have a page file, then all private committed memory in every process, no matter how long ago accessed, no matter how long the process has been idle, has to stay in RAM—because there is no other place to keep the contents.

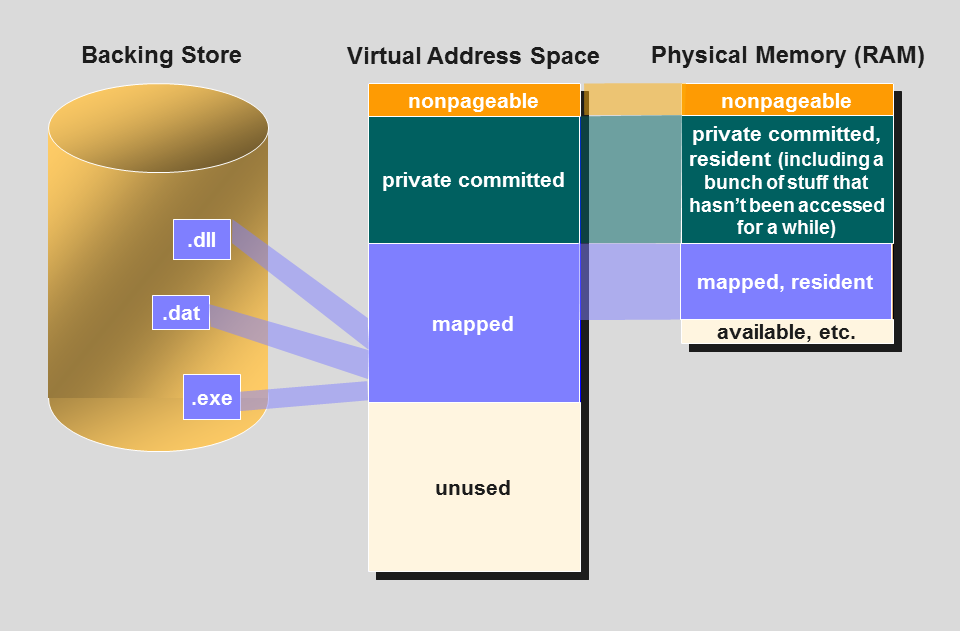

That leaves less room for code and data from mapped files. And that means that the mapped memory will be paged more than it would otherwise be. More-recently-accessed contents from mapped files may have to be paged out of RAM, in order to have enough room to keep all of the private committed stuff in. Compare the diagram above with the previous one.

Now that all of the private committed v.a.s. has to stay resident, no matter how long ago it was accessed, there’s less room in RAM for mapped file contents. Granted, there’s no pagefile I/O, but there’s correspondingly more I/O to the mapped files. Since the old stale part of committed memory is not being accessed, keeping it in RAM doesn’t help anything. There’s also less room for a “cushion” of available RAM. This is a net loss.
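The trade-off can be put into back-of-the-envelope form. The sketch below is only a toy model: the function name and every number in it are hypothetical, and it takes the best case for the pagefile (all of the stale committed memory gets written out). It is not how the memory manager actually decides what stays resident.

```python
def ram_left_for_mapped_mb(ram_mb: int, nonpageable_mb: int,
                           committed_mb: int, stale_committed_mb: int,
                           has_pagefile: bool) -> int:
    """RAM that remains for mapped-file pages (and the 'available' cushion)."""
    if has_pagefile:
        # Stale private committed pages can be moved out to the pagefile.
        resident_committed_mb = committed_mb - stale_committed_mb
    else:
        # No pagefile: every committed page has to stay in RAM.
        resident_committed_mb = committed_mb
    return max(0, ram_mb - nonpageable_mb - resident_committed_mb)


# Hypothetical machine: 8 GB RAM, 512 MB nonpageable, 5000 MB committed,
# of which 2000 MB has not been touched in a long time.
with_pagefile = ram_left_for_mapped_mb(8192, 512, 5000, 2000, has_pagefile=True)
without_pagefile = ram_left_for_mapped_mb(8192, 512, 5000, 2000, has_pagefile=False)
```

In this made-up case the pagefile-less system has 2000 MB less RAM to hold mapped file contents, and nothing is gained in exchange, because the stale committed pages are not being accessed anyway.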

You might say “But I have plenty of RAM now. I even have a lot of free RAM. However much of that long-ago-referenced private virtual memory there is, it must not be hurting me. So why can’t I run without a page file?”

“Low on virtual memory”; “Out of virtual memory”

Well, maybe you can. But there’s a second reason to have a pagefile:

Not having a pagefile can cause the “Windows is out of virtual memory” error, even if your system seems to have plenty of free RAM.

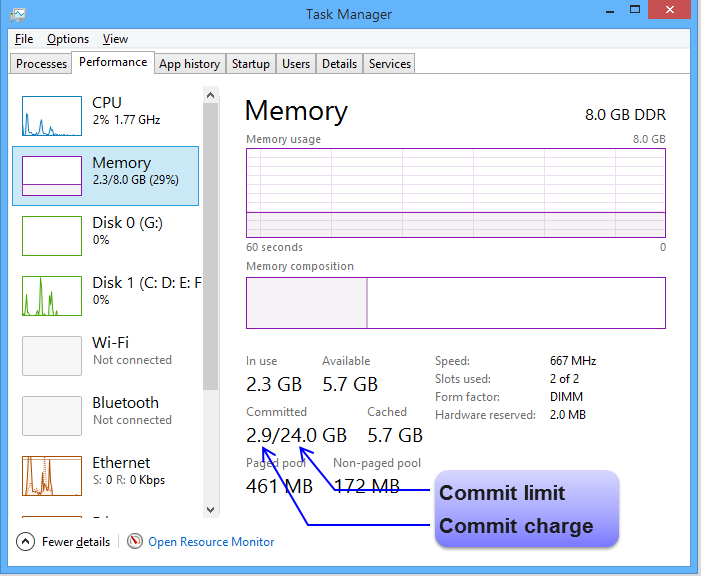

That error pop-up happens when a process tries to allocate more committed memory than the system can support. The amount the system can support is called the “commit limit.” It’s the sum of the size of your RAM (minus a bit to allow for the nonpageable stuff) plus the current size of your page file.

All processes’ private commit allocations together, plus some of the same stuff from the operating system (things like the paged pool), are called the “commit charge.” Here’s where you can quickly see the commit charge and commit limit on Windows 8 and 10:

Note: In Performance Monitor, these counters are called Memory\Committed Bytes and Memory\Commit Limit. Each process’s contribution to the commit charge is in Process\(process)\Private Bytes. The latter is the same counter that Task Manager’s Processes tab (Windows 7) or Details tab (Windows 8 through 10) calls Commit Size.

When any process tries to allocate private virtual address space, Windows checks the size of the requested allocation plus the current commit charge against the commit limit. If the commit limit is larger than that sum, the allocation succeeds; if the commit limit is smaller than that sum, then the allocation cannot be immediately granted. But if the pagefile can be expanded (in other words, if you have not set its initial and maximum sizes to the same value), and the allocation request can be accommodated by expanding the pagefile, the pagefile is expanded and the allocation succeeds. (This is where you would see the “system is running low on virtual memory” pop-up. And if you checked it before and after, you’d see that the commit limit has increased.)

If the pagefile cannot be expanded enough to satisfy the request (either because it’s already at its upper size limit, or there is not enough free space on the disk), or if you have no pagefile at all, then the allocation attempt fails. And that’s when you see the “system is out of virtual memory” error. (Changed to simply “out of memory” in Windows 10. Not an improvement, Microsoft!)
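The check described in the last two paragraphs can be sketched as a small model. This is an illustration of the logic only, not Windows code: the class and field names are invented, the pagefile here grows by exactly the shortfall (the real memory manager picks its own expansion increments), and treating “exactly equal to the limit” as success is an assumption.

```python
from dataclasses import dataclass


@dataclass
class CommitModel:
    ram_mb: int
    nonpageable_mb: int
    pagefile_mb: int        # current pagefile size
    pagefile_max_mb: int    # same as pagefile_mb means "cannot expand"
    disk_free_mb: int
    commit_charge_mb: int = 0

    @property
    def commit_limit_mb(self) -> int:
        # RAM (minus the nonpageable part) plus the current pagefile size.
        return self.ram_mb - self.nonpageable_mb + self.pagefile_mb

    def allocate(self, request_mb: int) -> str:
        needed = self.commit_charge_mb + request_mb
        if needed <= self.commit_limit_mb:
            self.commit_charge_mb = needed
            return "ok"
        shortfall = needed - self.commit_limit_mb
        room = min(self.pagefile_max_mb - self.pagefile_mb, self.disk_free_mb)
        if shortfall <= room:
            # "Running low on virtual memory": grow the pagefile, then succeed.
            self.pagefile_mb += shortfall
            self.disk_free_mb -= shortfall
            self.commit_charge_mb = needed
            return "ok after pagefile expansion"
        # Nothing changes on failure; the commit charge stays where it was.
        return "out of virtual memory"


no_pagefile = CommitModel(8192, 512, 0, 0, 100_000, commit_charge_mb=3072)
no_pagefile_result = no_pagefile.allocate(6144)

growable = CommitModel(8192, 512, 1024, 8192, 100_000, commit_charge_mb=3072)
growable_result = growable.allocate(6144)
```

Note that free or “available” RAM is not an input anywhere in the model; only the commit charge, the request, and the commit limit matter.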

The reason for this has to do with the term “commit.” The OS will not allow a process to allocate virtual address space, even though that address space may not all be used for a while (or ever), unless it has a place to keep the contents. Once the allocation has been granted, the OS has committed to make that much storage available.

For private committed address space, if it can’t be in RAM, then it has to be in the pagefile. So the “commit limit” is the size of RAM (minus the bit of RAM that’s occupied by nonpageable code and data) plus the current size of the pagefile. Whereas virtual address space that’s mapped to files automatically comes with a place to be stored, and so is not part of “commit charge” and does not have to be checked against the “commit limit.”

Remember, these “out of memory” errors have nothing to do with how much free RAM you have. Let’s say you have 8 GB RAM and no pagefile, so your commit limit is 8 GB. And suppose your current commit charge is 3 GB. Now a process requests 6 GB of virtual address space. (A lot, but not impossible on a 64-bit system.) 3 GB + 6 GB = 9 GB, over the commit limit, so the request fails and you see the “out of virtual memory” error.

But when you look at the system, everything will look ok! Your commit charge (3 GB) will be well under the limit (8 GB)… because the allocation failed, so it didn’t use up anything. And you can’t tell from the error message how big the attempted allocation was.

Note that the amount of free (or “available”) RAM didn’t enter into the calculation at all.
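Here is the same 8 GB example as a few lines of Python, for anyone who wants to plug in their own numbers. The function name is made up, and the nonpageable deduction defaults to zero only because the example above ignores it.

```python
def commit_check(ram_gb: float, pagefile_gb: float, commit_charge_gb: float,
                 request_gb: float, nonpageable_gb: float = 0.0):
    """Return (would_succeed, commit_limit_gb) for one allocation request.

    There is deliberately no 'free RAM' parameter: it is not part of the test.
    """
    commit_limit_gb = ram_gb - nonpageable_gb + pagefile_gb
    would_succeed = commit_charge_gb + request_gb <= commit_limit_gb
    return would_succeed, commit_limit_gb


# The example from the text: 8 GB RAM, no pagefile, 3 GB charged, 6 GB requested.
succeeds, limit = commit_check(ram_gb=8, pagefile_gb=0,
                               commit_charge_gb=3, request_gb=6)

# Same machine and same request, but with a (hypothetical) 4 GB pagefile.
succeeds_with_pagefile, limit_with_pagefile = commit_check(
    ram_gb=8, pagefile_gb=4, commit_charge_gb=3, request_gb=6)
```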

So for the vast majority of Windows systems, the advice is still the same: don’t remove your pagefile.

If you have one and don’t need it, there is no cost. Having a pagefile will not “encourage” more paging than otherwise; paging is purely a result of how much virtual address space is being referenced vs. how much RAM there is.

If you do need one and don’t have it, applications will fail to allocate the virtual memory they need, and the result (depending on how carefully the apps were written) may well be unexpected process failures and consequent data loss.

Your choice.

What about the rest? Those not in the vast majority? This would apply to systems that are always running a known, unchanging workload, with no changes to the application mix and no significant changes to the data being handled. An embedded system would be a good example. In such systems, if you’re running without a pagefile and you haven’t seen “out of virtual memory” for a long time, you’re unlikely to see it tomorrow. But there’s still no benefit to removing the pagefile.

What questions do you have about Windows memory management? Ask us in the comments! We’ll of course be discussing these and many related issues in our public Windows Internals seminars, coming up in May and July.

Stack space is initially reserved then committed as necessary. See http://msdn.microsoft.com/en-us/library/windows/desktop/ms686774%28v=vs.85%29.aspx

Thank you for the comment! That is absolutely correct, and when we talk about VirtualAlloc and committed vs. reserved v.a.s. in our internals seminars (shameless plug!) we do use the user mode stack as an example.

But for the purposes of this article I chose not to address that, or several other details for that matter; one is always trying to keep articles as short as possible, and I decided that those details would not have made the argument for the conclusion any stronger.

Thing is, stack space is germane to this discussion. With a page file, stack space can be reserved and not committed. Without a page file, all stack space has to be committed at the start of the thread, whether it is used or not. In that state, creating a thread is a touch more likely to fail; and requires all the stack memory to be committed immediately, whether it is used or not. Lots of threads would mean lots of memory is being committed but never used.

Sorry, but no… reserving v.a.s. (for the stack or otherwise) does not require a pagefile, nor does it affect commit charge. A reserved region simply needs a Virtual Address Descriptor that says “this range of Virtual Page Numbers is reserved.” No pagefile space is needed. This is easily demonstrated with testlimit.
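To make the reserve-versus-commit distinction concrete, here is a toy bookkeeping model. It is purely illustrative: the class is invented, the page counts are hypothetical, and unlike the real VirtualAlloc (which can reserve and commit in one call) it insists that pages be reserved first.

```python
class ToyAddressSpace:
    """Tracks which virtual pages are reserved and which are committed."""

    def __init__(self) -> None:
        self.reserved_pages: set[int] = set()
        self.committed_pages: set[int] = set()

    def reserve(self, first_page: int, count: int) -> None:
        # Reserving only records the range; nothing is charged for it.
        self.reserved_pages.update(range(first_page, first_page + count))

    def commit(self, first_page: int, count: int) -> None:
        pages = set(range(first_page, first_page + count))
        if not pages <= self.reserved_pages:
            raise ValueError("toy model: pages must be reserved before commit")
        self.committed_pages |= pages

    @property
    def commit_charge_pages(self) -> int:
        # Only committed pages need a guaranteed place in RAM or the pagefile.
        return len(self.committed_pages)


space = ToyAddressSpace()
space.reserve(first_page=0, count=256)     # e.g. a 1 MB stack of 4 KB pages
charge_after_reserve = space.commit_charge_pages
space.commit(first_page=254, count=2)      # hypothetical initial commit
charge_after_commit = space.commit_charge_pages
```

The reserved range costs nothing against the commit limit until pages in it are actually committed, with or without a pagefile.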

OK, so in this age of SSD (which is costly, so people size as low as they feel they can get by with), how much freespace, relative to installed RAM, would you recommend people leave available for pagefile and hiberfil?

For context, I’m getting questions like “If I have 16GB of RAM and I relocate my user profile directory and all data storage to a second drive, can I get away with a 32GB SSD for Windows?”

For the hibernate file, you don’t really have a choice: It needs to be the size of RAM. That’s what the OS will allocate for it if you enable hibernation. If you don’t want that much space taken up by the hibernate file, your only option is to not enable hibernation.

For the pagefile, my recommendation has long been that your pagefile’s default or initial size should be large enough that the performance counter Paging file | %usage (peak) is kept below 25%. My rationale for this is that the memory manager tries to aggregate pagefile writes into large clusters, the clusters have to be virtually contiguous within the pagefile, and internal space in the pagefile is managed like a heap; having plenty of free space in the page file is the only thing we can do to increase the likelihood of large contiguous runs of blocks being available within the pagefile.
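As a rough worked version of that rule of thumb: if you know the current pagefile size and the peak usage percentage observed under your heaviest real workload, the smallest initial size that would have kept the peak at 25% follows directly. The function name and the sample readings below are hypothetical.

```python
import math


def min_initial_pagefile_mb(current_size_mb: int, peak_usage_pct: float,
                            target_pct: float = 25.0) -> int:
    """Smallest initial size (MB) at which the observed peak would be
    no more than target_pct of the pagefile."""
    # Peak space used is size * pct / 100; dividing by target_pct / 100
    # gives the size at which that same usage equals the target percentage.
    return math.ceil(current_size_mb * peak_usage_pct / target_pct)


# Hypothetical reading: a 4096 MB pagefile that peaked at 40% usage.
suggested_mb = min_initial_pagefile_mb(4096, 40)

# Hypothetical reading: a 2048 MB pagefile that peaked at only 10%.
already_fine_mb = min_initial_pagefile_mb(2048, 10)
```

In the second case the result is smaller than the current size, which simply means the existing pagefile already satisfies the 25% guideline.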

The above is not a frequently expressed opinion; I should probably expand it to a blog post.

Re “relative to installed RAM”: sizing the pagefile to 1.5x or 1x the size of RAM is simply what Windows does at installation time. It was never intended to be more than an estimate that would almost always result in a pagefile that’s large enough, with no concern that it might be much larger than it needed to be. Note that the only cost of a pagefile of initial size “much larger than it needs to be” is in the disk (or SSD) space occupied. It was not that long ago that hard drives cost (in $ per GB) about what SSDs do now, so I don’t see that the cost of SSD is a factor.

I’m not sure how free space on the disk enters into it, except where allowing pagefile expansion is concerned. The above suggestion is for the default or initial size. I see no reason to limit the maximum size at all.

I do agree that SSD becomes more affordable every day. Still, I often see people trying to use the least amount of SSD possible. (For context, I help a lot of people in an IRC channel about Windows.) So I’m trying to develop a rule of thumb for them.

Given what you said, it seems like the answer would be something like this: 1) A default installation of Windows 8.1 will typically use around 14GB of space, but with updates and so on could reasonably grow to 25GB. 2) the hiberfil will be the size of RAM and 3) you should leave at least 1.5x RAM disk space available for pagefile.

So. If we have 16GB RAM, then allow 1) 25GB for Windows 2) 16GB for hiberfil and 3) 24GB for pagefile. Which means one should set aside at least a 65GB partition for Windows’ C: drive – and this is before thinking about how much space will be needed for applications and data.

Or to put it another way. If (at default pagefile settings) freespace + hiberfil + pagefile is less than 2.5x amount of RAM in the system, “out of virtual memory” errors are just one memory-hungry application away. The likelihood of this error goes down, the more freespace one leaves on the disk.
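The arithmetic in this proposal, written out so the inputs can be changed. This only encodes the rule of thumb suggested in this comment (25 GB for Windows, a RAM-sized hiberfil, 1.5x RAM for the pagefile); the function and parameter names are made up, and it is not a sizing recommendation in itself.

```python
def os_partition_estimate_gb(ram_gb: float, windows_gb: float = 25.0,
                             pagefile_factor: float = 1.5) -> float:
    """Space to set aside for Windows, the hibernate file, and the pagefile."""
    hiberfil_gb = ram_gb                    # hibernate file: the size of RAM
    pagefile_gb = pagefile_factor * ram_gb  # the commenter's 1.5x allowance
    return windows_gb + hiberfil_gb + pagefile_gb


estimate_16gb = os_partition_estimate_gb(16)   # 25 + 16 + 24
estimate_8gb = os_partition_estimate_gb(8)     # 25 + 8 + 12
```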

To clarify, I was not defending or promoting the “1.5x RAM” idea for pagefile initial size, just explaining it. Windows’ use of it at installation time (it’s actually 1x in later versions) is based on the notion that installed RAM will be approximately scaled to workload: Few people will buy 16 GB RAM for a machine to be used for light Office and web browsing use, and few will install just 2 GB RAM where the workload will include 3d modeling or video editing.

But my experience is that if you suggest “some factor times size of RAM” as a rule to be followed, you will get pushback: “But with more RAM you should need less pagefile space, not more!” And if the workload is the same, that’s completely true.

I would also phrase things differently re. leaving disk space “available” for the pagefile. One should set the initial pagefile size to a value that will be large enough. This allocates disk space to the pagefile, it does not leave it “available.” As stated before, my metric for “large enough” is “large enough that no more than 25% pagefile space is used under maximum actual workload”.

The only way free space on the disk should be involved or considered w.r.t. the pagefile size is in enabling pagefile expansion, i.e. setting the maximum size larger than the initial. Now, if the initial size is large enough, the pagefile will never have to be expanded, so enabling expansion would seem to do nothing. But it provides a zero-cost safety net, which will save you in case your initial size turns out to be not large enough. And of course pagefile expansion is ultimately limited by the free space on the disk.

Thanks for your thoughts on the matter, Jamie!

Just to clarify the intent of the question a little, our general advice about pagefile settings is to leave them alone. System-managed all the way. Our hope is that this will remove the urge to limit or remove the pagefile completely. Your idea of setting an initial size but no maximum is interesting; we’ll consider changing our advice! We do heavily stress that aside from (potential) disk space usage, there’s no downside to allowing the pagefile to grow to whatever size it wants to have. As I’m sure you’re aware, this is somehow counterintuitive to quite a few people!

So, given that and the basic question “how much disk space should I allow for the OS?” I wanted to be able to give a relatively safe rule of thumb for sizing the original OS partition. I’ll still say something like “sure, you can probably get away with less, but the smaller you make it, the more likely you’ll later find yourself in a pickle”.

I know more about the craters on the moon than I know about the memory issues on my computer.

So, hopefully someone out there can help me understand this and maybe suggest a fix.

I have Windows 7 on my Dell laptop. I have 750gig hard drive. A month or so ago I checked the used space on my hard drive and I had used just shy of 50% of space.

Now, I am down to less than 50mb! I have no idea where all the memory went. Lately, every time I boot the laptop I’m getting the message that the system has created a paging file and as I’m on the laptop the error message pops up saying low disc space (it actually just popped up).

I’ve off-loaded maybe 5gbs of files only to have the low disc space message pop up an hour later.

I have not loaded anything new on the laptop (not that I know of) prior to the memory loss.

I have run multiple virus scans, but they have come up empty.

It’s difficult to even be on email at this point.

I don’t know enough to have programmed it or to have altered its setup in a way that could have led to the vanishing memory.

The only thing that I have done – as suggested on other blog sites, is to delete old restore points. That didn’t do anything.

What could eat over 300gigs of memory? How do I stop it and how do I get that memory back?

Any guidance would be greatly appreciated.

Thank you.

Hi. First, let us say that we sympathize – this sort of thing can be very frustrating.

This article doesn’t really address hard drive space, but rather virtual address space and physical memory (i.e. RAM). It sounds as if something in your system is furiously writing to your hard drive – other than the creation of the pagefile. The space on the hard drive is not usually thought of as “memory.”

To track this sort of thing down, my first stop would be Task Manager. Right-click on an empty part of your taskbar and click “Start Task Manager”. Select the “Processes” tab. Then go to the View menu, and click “Select Columns”. Check the box for “I/O Writes”. OK. Oh, and click the “Show processes from all users” button at the bottom. Finally, click on the “I/O Writes” column head so that this column is sorted with the largest value at the top. Unfortunately this shows the total number of writes, not the rate. But it’s a start. If you see one of these ticking up rapidly, that’s a process to look at.

A better tool might be the “Resource Monitor”, which you can get to from Task Manager’s “Performance” tab. Click the “Resource Monitor” button near the bottom. In Resource Monitor, select the “Disk” tab. In this display you already have columns for read and write rates, in bytes/sec. Click the “Write (B/sec)” column head so that the largest values in this column are at the top. Now, the process at the top might be “System”; if so, that is due to how the Windows file cache works. But the thing to look for is the non-“System” processes that are doing a lot of writes, even when you think your system should be quiet.

Still in Resource Monitor: If you expand the “Disk Activity” portion of the display you’ll see the I/O rates broken down by file.

There are some utilities out there, some free, some not, to help you find where all the space is going. The first one that came up in my Google search for “disk space analyzer” is “TreeSize Free”, which gives an Explorer-like display of the tree of directories, but with each annotated with the total size at and below that point. Another is “WinDirStat”, which gives a much more graphical view. This seems to be something a lot of people want help with; the search results show two articles at LifeHacker in the last few years on such software. Try a few of the free ones and see what they tell you.

Finally, I would not so much look for malware like viruses (malware these days tries pretty hard to avoid notice, and filling up your disk space is something most people notice), but just buggy software. (Of course, malware can be buggy…) I recently traced a similar problem – not filling up the hard drive, but writing to it incessantly, thereby using up the drive’s I/O bandwidth – to the support software for a fancy mouse. Naturally I pulled the plug on that mouse and uninstalled its software. For your case… if the problem has been going on for a month, what have you added to the system in the last month? From Control Panel, you can go to “Uninstall a program”, and the table you’ll see there has clickable column heads for sorting. Sort by installation date and see what’s new.

Hope this helps! – Jamie Hanrahan

Jamie, today I was watching this Channel 9/MVA video about Windows Performance: https://channel9.msdn.com/Series/Windows-Performance/02

The section on physical and virtual memory, starting around 17:00, strikes me as something you could improve greatly.

Indeed, that section blurred a lot of terms. However I feel it necessary to point out that to really explain “Windows memory management” takes a significant amount of time. There’s no way anyone could do much better in a similar amount of time to what was offered there.